Interpretable Passive Multi-Modal Sensor Fusion for Human Identification and Activity Recognition

Abstract

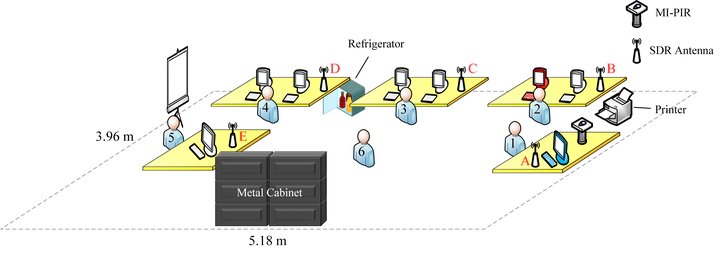

Human monitoring applications in indoor environments depend on accurate human identification and activity recognition (HIAR). Single-modality sensor systems have been shown to be accurate for HIAR, but they have shortcomings in privacy, intrusiveness, and cost. To address these shortcomings for long-term monitoring, this work proposes PRF-PIR, an interpretable, passive, multi-modal sensor fusion system. PRF-PIR is composed of one software-defined radio (SDR) device and one novel passive infrared (PIR) sensor system. A recurrent neural network (RNN) serves as the HIAR model, handling the temporal dependence of the passive information captured by both modalities. We validate PRF-PIR as a potential human monitoring system by collecting data on eleven activities from twelve human subjects in an academic office environment. On this data, the sensor fusion system achieves an accuracy of 0.9866 for human identification and 0.9623 for activity recognition. The results are supported with explainable artificial intelligence (XAI) methodologies, which validate sensor fusion over the deployment of single-sensor solutions. PRF-PIR provides a passive, non-intrusive, and highly accurate system that is robust to uncertain, highly similar, and complex at-home activities performed by a variety of human subjects.